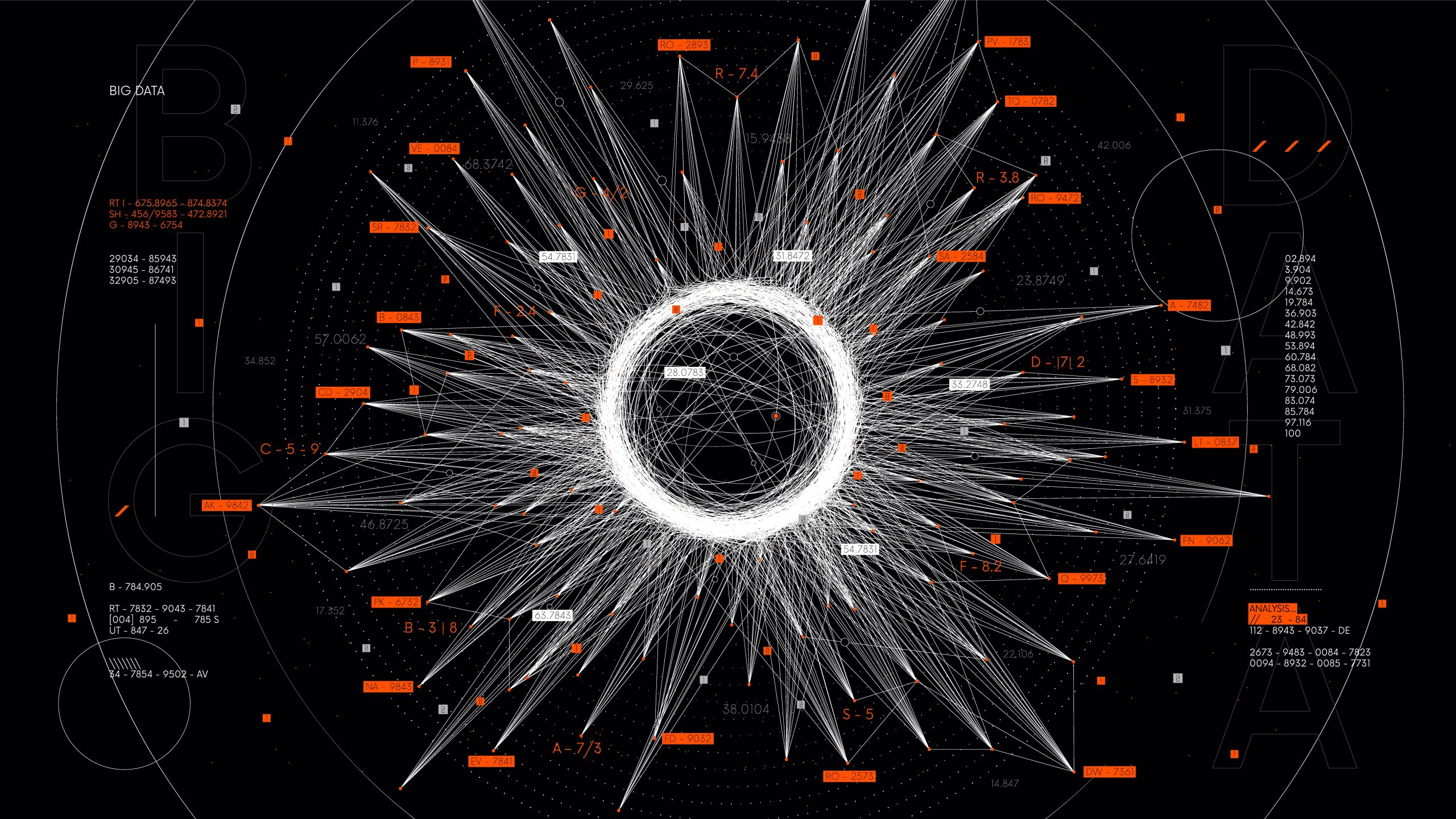

Big Data Analytics

Big Data Analytics is the practice of capturing, processing, and interpreting large and complex datasets. It relies on advanced tools to reveal patterns and correlations that inform decision-making. Typical uses include understanding user behavior, tracking market trends, monitoring system performance, strengthening cybersecurity, and forecasting future developments. By adopting Big Data Analytics, organizations can turn their data into a source of innovation and competitive advantage in a data-driven landscape.

Data Analysis and Visualization

Performing in-depth data analysis and creating visualizations to derive insights from large datasets. This involves using languages such as SQL, Python, and R, or big data processing frameworks such as Hadoop and Spark. The scope includes understanding business requirements, cleansing and transforming data, and presenting findings through interactive dashboards and reports.
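
As a minimal sketch of the cleansing, transformation, and presentation steps, the Python below works on a handful of hypothetical order records. The field names `order_id`, `region`, and `amount` are illustrative assumptions. It uses only the standard library. In practice the same logic would more likely be written in SQL, pandas, or Spark, and the text bar chart simply stands in for a dashboard widget.

```python
from collections import defaultdict

# Hypothetical raw order records, e.g. rows exported from a database or CSV file.
RAW_ROWS = [
    {"order_id": "1", "region": " North ", "amount": "120.50"},
    {"order_id": "2", "region": "south", "amount": "80"},
    {"order_id": "2", "region": "south", "amount": "80"},  # duplicate row
    {"order_id": "3", "region": "NORTH", "amount": ""},  # missing amount
    {"order_id": "4", "region": "East", "amount": "n/a"},  # non-numeric amount
    {"order_id": "5", "region": "east", "amount": "45.25"},
]


def cleanse(rows):
    """Drop duplicate or invalid rows and normalize region names."""
    seen = set()
    clean = []
    for row in rows:
        if row["order_id"] in seen:
            continue  # skip orders already kept
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # skip rows with a missing or non-numeric amount
        seen.add(row["order_id"])
        clean.append({"region": row["region"].strip().title(), "amount": amount})
    return clean


def revenue_by_region(rows):
    """Transform: aggregate total revenue per region."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["region"]] += row["amount"]
    return dict(totals)


def text_bar_chart(totals, width=20):
    """Present: render a plain-text bar chart, largest region first."""
    peak = max(totals.values())
    lines = []
    for region, total in sorted(totals.items(), key=lambda item: -item[1]):
        bar = "#" * round(width * total / peak)
        lines.append(f"{region:<6} {bar} {total:.2f}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(text_bar_chart(revenue_by_region(cleanse(RAW_ROWS))))
```

The steps are kept as separate functions so each one (cleansing rules, aggregation, presentation) can be tested or replaced independently.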

Machine Learning and Predictive Analytics

Implementing machine learning models that make predictions and recommendations based on historical data. The work involves data preprocessing, feature engineering, model training, and evaluation. Machine learning algorithms are used to build predictive models for IT applications such as anomaly detection, customer churn prediction, and recommendation systems.
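
To illustrate the train, predict, and evaluate cycle on the anomaly-detection use case, here is a minimal sketch built on a z-score detector. This is a simple statistical baseline rather than a learned machine learning model. The response-time values, the ground-truth labels, the `ZScoreDetector` class, and the default threshold of 3 standard deviations are all hypothetical choices for illustration.

```python
import statistics
from dataclasses import dataclass

# Hypothetical historical response times (ms) considered normal behavior.
HISTORY = [100, 102, 98, 101, 99, 103, 97, 100, 101, 99]


@dataclass
class ZScoreDetector:
    """Flags values that lie more than `threshold` standard deviations from the mean."""
    threshold: float = 3.0
    mean: float = 0.0
    stdev: float = 1.0

    def fit(self, history):
        # "Training": estimate the normal range from historical observations.
        self.mean = statistics.fmean(history)
        self.stdev = statistics.pstdev(history) or 1.0  # guard against zero spread
        return self

    def predict(self, values):
        # True marks a value as anomalous.
        return [abs(v - self.mean) / self.stdev > self.threshold for v in values]


def precision_recall(predicted, actual):
    """Evaluate boolean predictions against labeled ground truth."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)
    fp = sum(1 for p, a in pairs if p and not a)
    fn = sum(1 for p, a in pairs if a and not p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


if __name__ == "__main__":
    detector = ZScoreDetector().fit(HISTORY)
    new_values = [101, 150, 99, 40, 104]
    labels = [False, True, False, True, False]  # hypothetical ground truth
    flags = detector.predict(new_values)
    print(flags, precision_recall(flags, labels))
```

A baseline like this is useful as a yardstick: a more complex model for the same task should beat its precision and recall before it is worth deploying.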

Data Engineering and ETL (Extract, Transform, Load)

Designing and implementing data pipelines to extract data from various sources, transform it into a usable format, and load it into the target data repositories. This scope includes working with data integration tools, databases, and cloud-based data services. Data engineers play a crucial role in ensuring data quality, data consistency, and data accessibility for analytics purposes.
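
The following is a minimal extract-transform-load sketch that uses only Python's standard library:

- `csv` reads a hypothetical customer export.
- The transform step applies two example data-quality rules: a required email, and de-duplication by `customer_id`.
- An in-memory `sqlite3` database stands in for the target repository.

The table, the column names, and the rules are assumptions for illustration. Production pipelines would typically rely on the integration tools and cloud data services mentioned above.

```python
import csv
import io
import sqlite3

# Hypothetical source extract; in practice this could come from an API, a file drop, or another database.
SOURCE_CSV = """customer_id,email,signup_date,plan
1, Alice@Example.com ,2024-01-15,pro
2,bob@example.com,2024-02-01,free
3,,2024-02-10,free
1,alice@example.com,2024-01-15,pro
"""


def extract(text):
    """Extract: read raw rows from a CSV source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: normalize fields, enforce a required email, de-duplicate by key."""
    by_id = {}
    for row in rows:
        email = row["email"].strip().lower()
        if not email:
            continue  # data-quality rule: email is required
        customer_id = int(row["customer_id"])
        by_id[customer_id] = (
            customer_id,
            email,
            row["signup_date"].strip(),
            row["plan"].strip(),
        )
    return list(by_id.values())


def load(conn, records):
    """Load: insert or replace the cleaned records in the target table."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS customers (
               customer_id INTEGER PRIMARY KEY,
               email TEXT NOT NULL,
               signup_date TEXT,
               plan TEXT
           )"""
    )
    conn.executemany("INSERT OR REPLACE INTO customers VALUES (?, ?, ?, ?)", records)
    conn.commit()


def run_pipeline(text, conn):
    """Run extract -> transform -> load and return the resulting row count."""
    load(conn, transform(extract(text)))
    return conn.execute("SELECT COUNT(*) FROM customers").fetchone()[0]


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    print(run_pipeline(SOURCE_CSV, connection))
```

The load step uses `INSERT OR REPLACE` keyed on `customer_id`. As a result, re-running the pipeline on the same extract leaves the table unchanged, which is one simple way to keep loads idempotent.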

Big Data Infrastructure and Architecture

Working on the design, setup, and maintenance of big data infrastructure and architecture. This includes selecting appropriate data storage solutions, data processing frameworks, and data distribution mechanisms. The scope involves optimizing the infrastructure for performance, scalability, and cost-effectiveness while ensuring data security and compliance.
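
Data distribution is one place where architecture choices directly affect scalability. As a hedged illustration, the sketch below implements consistent hashing with virtual nodes. This technique is used by some distributed databases to spread data across storage nodes. The node names, the count of 100 virtual nodes, and the MD5-based `stable_hash` helper are illustrative assumptions, not a configuration for any particular platform.

```python
import bisect
import hashlib


def stable_hash(key):
    """64-bit hash that is stable across processes (unlike the built-in hash())."""
    return int.from_bytes(hashlib.md5(key.encode("utf-8")).digest()[:8], "big")


class ConsistentHashRing:
    """Assigns keys to storage nodes using consistent hashing with virtual nodes."""

    def __init__(self, nodes, vnodes=100):
        self.vnodes = vnodes
        self._ring = []  # sorted (hash, node) points around the ring
        for node in nodes:
            self.add_node(node)

    def add_node(self, node):
        # Each physical node gets several points on the ring to even out the load.
        for i in range(self.vnodes):
            bisect.insort(self._ring, (stable_hash(f"{node}#{i}"), node))

    def node_for(self, key):
        # The owner is the first point at or after the key's hash, wrapping to the start.
        idx = bisect.bisect_left(self._ring, (stable_hash(key), ""))
        return self._ring[idx % len(self._ring)][1]


if __name__ == "__main__":
    keys = [f"user:{i}" for i in range(10_000)]
    ring = ConsistentHashRing(["node-a", "node-b", "node-c"])
    before = {k: ring.node_for(k) for k in keys}
    ring.add_node("node-d")
    moved = sum(before[k] != ring.node_for(k) for k in keys)
    # Naive modulo placement for comparison: most keys change node when N goes from 3 to 4.
    moved_modulo = sum(stable_hash(k) % 3 != stable_hash(k) % 4 for k in keys)
    print(f"consistent hashing moved {moved} keys; modulo moved {moved_modulo}")
```

With consistent hashing, adding a node moves only the keys whose ring segments the new node takes over, and every moved key lands on that new node. Here that is expected to be roughly a quarter of the keys. Naive `hash % N` placement, by contrast, reassigns most keys whenever N changes. That difference is why this family of techniques suits clusters that scale out.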